QualityWatch

A Nuffield Trust and Health Foundation programme

Latest from QualityWatch

QualityWatch is a Nuffield Trust and Health Foundation programme providing independent scrutiny into how the quality of health and social care is changing over time.

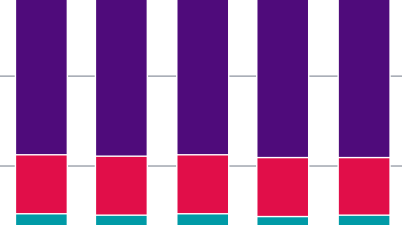

Declining access to social care

Read QualityWatch's annual statement, which this year focuses on access to adult social care. Drawing on a range of key figures and statistics, it shows how the state's ability to provide quality care that older people can access is getting worse.

Trending indicators

Here are some of our most popular indicators showing how care quality has changed over time. Visit the 'Indicators' section to see them all, alongside hundreds of other measures of quality in health and social care.

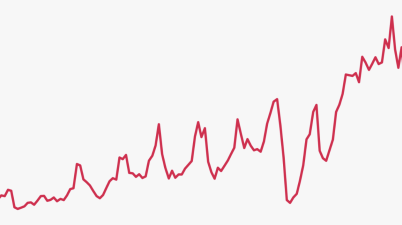

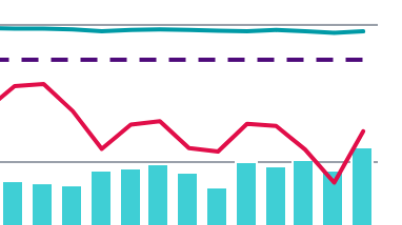

Cancer waiting times

Indicator

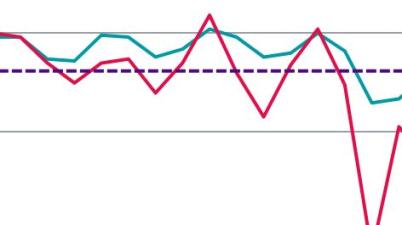

Ambulance response times

A&E waiting times

Indicator

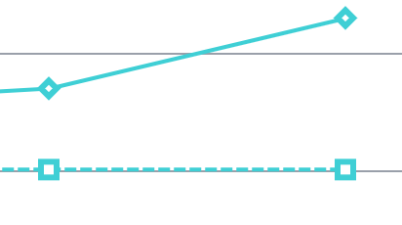

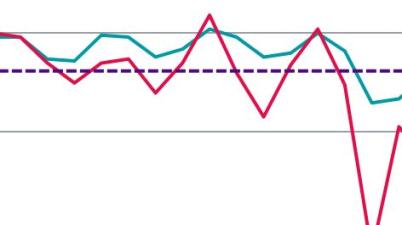

Effectiveness of sexual health services
